tags:

aliases:

- NetworkTablesRobot Radio

The official radio documentation is complete and detailed, and should serve as your primary resource.

https://frc-radio.vivid-hosting.net/

However, it's not always obvious what you need to look up to get moving. Consider this document a simple guide and jumping-off point for finding the right documentation elsewhere.

Setting up the radio for competition

You don't! The Field Technicians at competitions will program the radio for competitions.

When configured for competition play, you cannot connect to the radio via wifi. Instead, use an ethernet cable, or

Setting up the radio for home

The home radio configuration is a common pain point.

Option 1: Wired connection

This option is the simplest: Just connect to the robot via an ethernet or USB cable, and do whatever you need to do. For quick checks, this makes sense, but it is obviously suboptimal for things like driving around.

Option 2: 2.4GHz Wifi Hotspot

The radio does have a 2.4GHz wifi hotspot, albeit with some limitations. This mode is suitable for many practices, and is generally the recommended approach for most everyday practices due to ease of use.

Note, this option requires access to the tiny DIP switches on the back of the radio! You'll want to make sure that your hardware teams don't mount the radio in a way that makes this impossible to access.

Option 3: Tethered Bridge

This option uses a second radio to connect your laptop to the robot. This is the most cumbersome and limited way to connect to a robot, and makes swapping who's using the bot a bit more tricky.

However, this is also the most performant and reliable connection method. This is recommended when doing extended driving sessions, final performance tuning, and other scenarios where you're trying to simulate competition-ready environments.

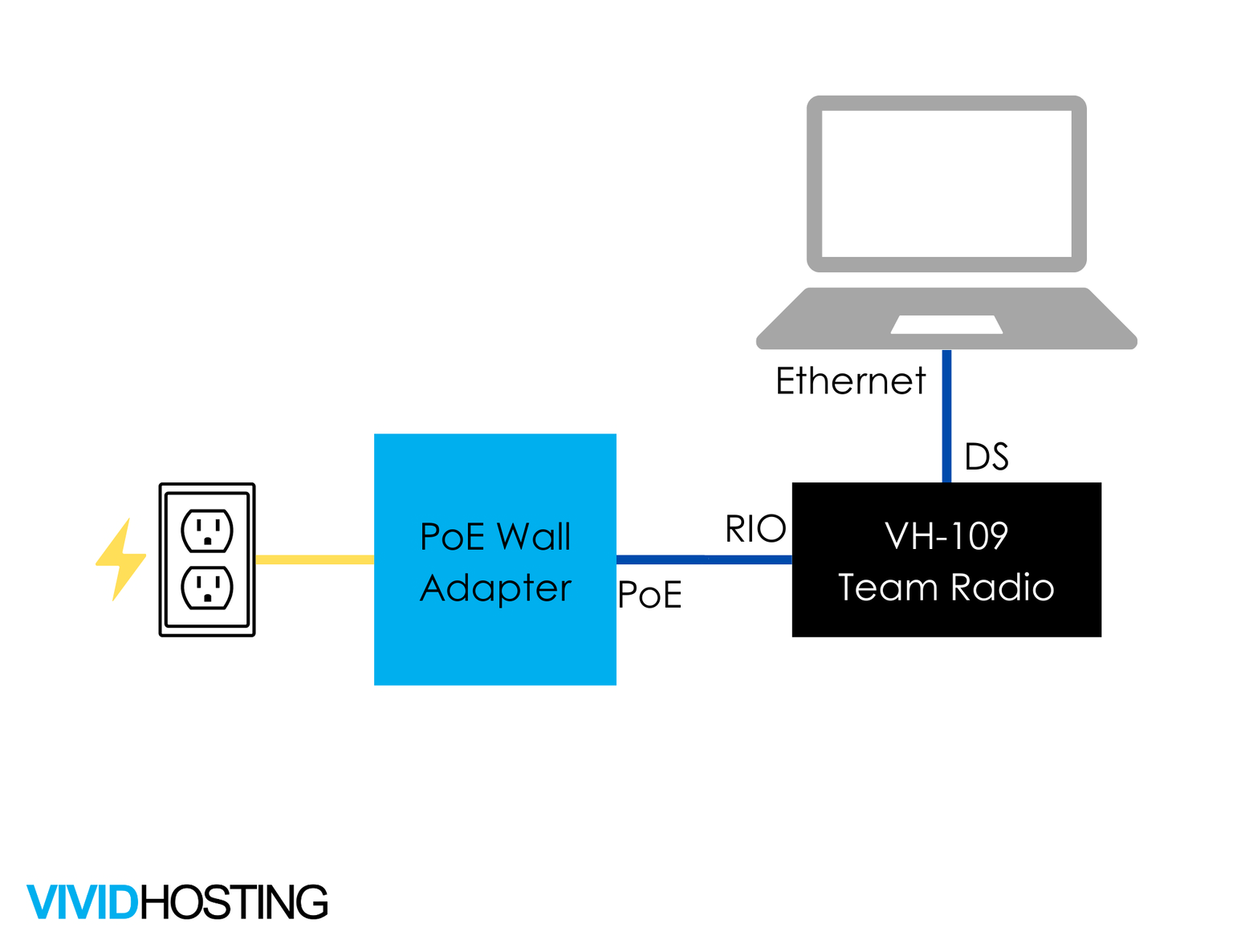

This option has a normal robot on one end, and your driver-station setup will look like the image shown here. See https://frc-radio.vivid-hosting.net/overview/practicing-at-home for full setup directions.

Bonus Features

Port Forwarding

Port forwarding allows you to bridge networks across different interfaces.

The practical application in FRC is being able to access network devices via the USB interface! This is mostly useful for quickly interfacing with vision hardware like the Limelight or PhotonVision at competitions.

//Add in the constructor in Robot.java or RobotContainer.java

// If you're using a Limelight

PortForwarder.add(5800, "limelight.local", 5800);

// If you're using PhotonVision

PortForwarder.add(5800, "photonvision.local", 5800);

Scripting the radio

The radio has some scriptable interfaces, allowing programmatic access to quickly change or read settings.

Basic Telemetry

Goals

Understand how to efficiently communicate to and from a robot for diagnostics and control

Success Criteria

- Print a notable event using the RioLog

- Find your logged event using DriverStation

- Plot some sensor data (such as an encoder reading), and view it on Glass/Elastic

- Create a subfolder containing several subsystem data points.

As a telemetry task, success is thus open ended, and should just be part of your development process; The actual feature can be anything, but a few examples we've seen before are

Why you care about good telemetry

By definition, a program runs exactly as the code was written to run. Most notably, this does not necessarily mean the code runs as it was intended to.

When looking at a robot, there are a bunch of factors that can be set in ways that were not anticipated, resulting in unexpected behavior.

Telemetry helps you see the bot as the bot sees itself, making it much easier to bridge the gap between what it's doing and what it should be doing.

Printing + Logging

Simply printing information to a terminal is often the easiest form of telemetry to write, but rarely the easiest one to use. Because all print operations go through the same output interface, the more information you print, the harder it is to manage.

This approach is best used for low-frequency information, especially if you care about quickly accessing the record over time. It's best used for marking notable changes in the system: Completion of tasks, critical events, or errors that pop up. Because of this, it's highly associated with "logging".

The methods to print are attached to the particular print channels

//System.out is the normal output channel

System.out.println("string here"); //Print a string

System.out.println(764.3); //you can print numbers, variables, and many other objects

//There's also other methods to handle complex formatting....

//But we aren't too interested in these in general.

System.out.printf("Value of thing: %d%n", 12); //%d formats an integer, %n ends the line

A typical way this would be used would be something like this:

public class ExampleSubsystem extends SubsystemBase {

boolean isAGamePieceLoaded=false;

boolean wasAGamePieceLoadedLastCycle=false;

public Command load(){

//Some operation to load a game piece and set the loaded state

return runOnce(()->isAGamePieceLoaded=true);

}

public void periodic(){

//Only print on the cycle where the state changes

if(isAGamePieceLoaded && !wasAGamePieceLoadedLastCycle){

System.out.println("Game piece now loaded!");

}

if(!isAGamePieceLoaded && wasAGamePieceLoadedLastCycle){

System.out.println("Game piece no longer loaded");

}

//Remember this cycle's state for the next comparison

wasAGamePieceLoadedLastCycle=isAGamePieceLoaded;

}

}

Rather than spamming "GAME PIECE LOADED" 50 times a second for however long a game piece is in the bot, this pattern cleanly captures the changes when a piece is loaded or unloaded.
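The same edge-detection idea can be tried off the robot. The sketch below is plain Java with no WPILib dependency; the class name LoadedStateLogger and its update method are hypothetical, introduced only for illustration. It returns a message only on the cycle where the state changes.

```java
import java.util.Optional;

/** Hypothetical helper: produces a message only when the loaded state changes. */
class LoadedStateLogger {
    private boolean wasLoadedLastCycle = false;

    /** Call once per loop with the current state; an empty result means nothing changed. */
    Optional<String> update(boolean isLoaded) {
        Optional<String> message = Optional.empty();
        if (isLoaded && !wasLoadedLastCycle) {
            message = Optional.of("Game piece now loaded!");
        } else if (!isLoaded && wasLoadedLastCycle) {
            message = Optional.of("Game piece no longer loaded");
        }
        // Remember this cycle's state for the next comparison
        wasLoadedLastCycle = isLoaded;
        return message;
    }
}
```

On a robot, you would call update from periodic() and hand any returned message to System.out.println.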

In a more typical Command-based robot, you could put print statements like this in the end() operation of your command, making it even easier and cleaner.

The typical interface for reading print statements is the RioLog: You can access this via the Command Palette (Ctrl+Shift+P) by typing > WPILib: Start RioLog. You may need to connect to the robot first.

These print statements also show up in the DriverStation logs viewer, making it easier to pair your printed events with other driver-station and match events.

NetworkTables

Data in our other telemetry applications uses the NetworkTables interface, with the typical easy access mode being the SmartDashboard API. This uses a "key" or name for the data, along with the value. There are a few function names for the different data types you can interact with:

// Put information into the table

SmartDashboard.putNumber("key",0); // Any numerical types like int or float

SmartDashboard.putString("key","value");

SmartDashboard.putBoolean("key",false);

SmartDashboard.putData("key",field2d); //Many built-in WPILIB classes have special support for publishing

You can also "get" values from the dashboard, which is useful for on-robot networking with devices like Limelight, PhotonVision, or for certain remote interactions and non-volatile storage.

Note that, since it's possible you could request a key that doesn't exist, all these functions require a "default" value; if the value you're looking for is missing, it'll just give you the provided default.

SmartDashboard.getNumber("key",0);

SmartDashboard.getString("key","not found");

SmartDashboard.getBoolean("key",false);

NetworkTables also supports hierarchies using the "/" separator: This allows you to separate things nicely, and the telemetry tools will let you interface with groups of values.

SmartDashboard.putNumber("SystemA/angle",0);

SmartDashboard.putNumber("SystemA/height",0);

SmartDashboard.putNumber("SystemA/distance",0);

SmartDashboard.putNumber("SystemB/angle",0);

While not critical, it is also helpful to recognize that within their appropriate hierarchy, keys are displayed in alphabetical order! Naming things carefully can thus help with organizing and grouping data.

Good Organization -> Faster debugging

As you can imagine, with multiple people each trying to get robot diagnostics, this can get very cluttered. There's a few good ways to make good use of Glass for rapid diagnostics:

- Group your keys using "group/key". All items with the same "group/" value get put into the same subfolder, and are easier to track. Often subsystem names make a great group pairing, but if you're tracking something specific, making a new group can help.

- Label keys with units: a key called "angle" is best when written as "angle degree"; this ensures you and others don't confuse it with "angle rad".

- Once you have your grouping and units, add more values! Especially when you have multiple values that should be the same. One of the most frequent ways for a system to go wrong is when two values differ, but shouldn't.

A good case study is an arm: You would have

- An absolute encoder angle

- the relative encoder angle

- The target angle

- motor output

And you would likely have a lot of other systems going on. So, for the arm you would want to organize things something like this

SmartDashboard.putNumber("arm/enc Abs(deg)",absEncoder.getAngle());

SmartDashboard.putNumber("arm/enc Rel(deg)",encoder.getAngle());

SmartDashboard.putNumber("arm/target(deg)",targetAngle);

SmartDashboard.putNumber("arm/output(%)",motor.getAppliedOutput());

A good sanity check is to think "if someone else were to read this, could they figure it out without digging in the code". If the answer is no, add a bit more info.
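One way to keep the group and unit conventions consistent across a team is a tiny key-building helper. This is only a sketch: TelemetryKeys and its key method are hypothetical names, not part of WPILib, and the "group/name(unit)" layout simply mirrors the arm example above.

```java
/** Hypothetical helper for building consistent "group/name(unit)" keys. */
final class TelemetryKeys {
    private TelemetryKeys() {}

    /** Joins the group, value name, and unit into a single NetworkTables key. */
    static String key(String group, String name, String unit) {
        return group + "/" + name + "(" + unit + ")";
    }
}
```

The result can be passed anywhere a key string is expected, for example as the first argument of SmartDashboard.putNumber.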

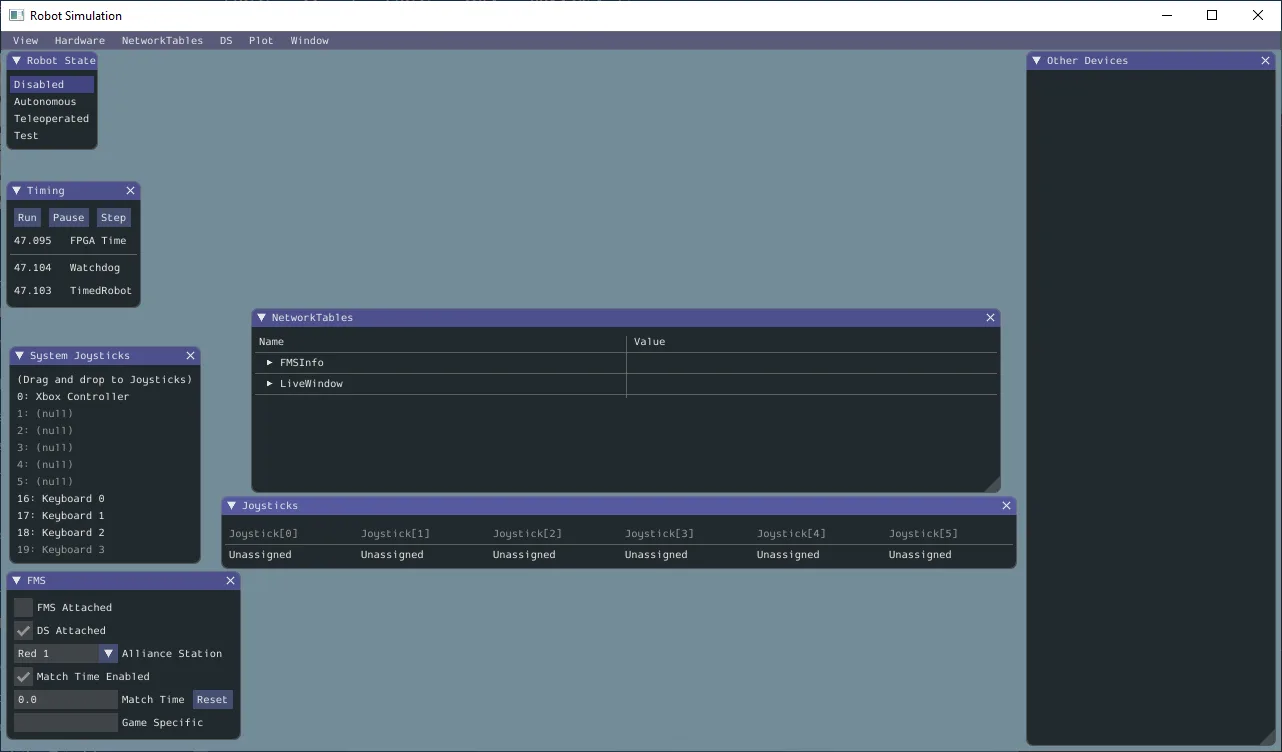

Glass

Glass is our preferred telemetry interface as programmers: It offers great flexibility, easy tracking of many potential outputs, and is relatively easy to use.

Glass does not natively "log" data that it handles though; This makes it great for realtime diagnostics, but is not a great logging solution for tracking data mid-match.

This is a great intro to how to get started with Glass:

https://docs.wpilib.org/en/stable/docs/software/dashboards/glass/index.html

For the most part, you'll be interacting with the NetworkTables block, and adding visual widgets using Plot and the NetworkTables menu item.

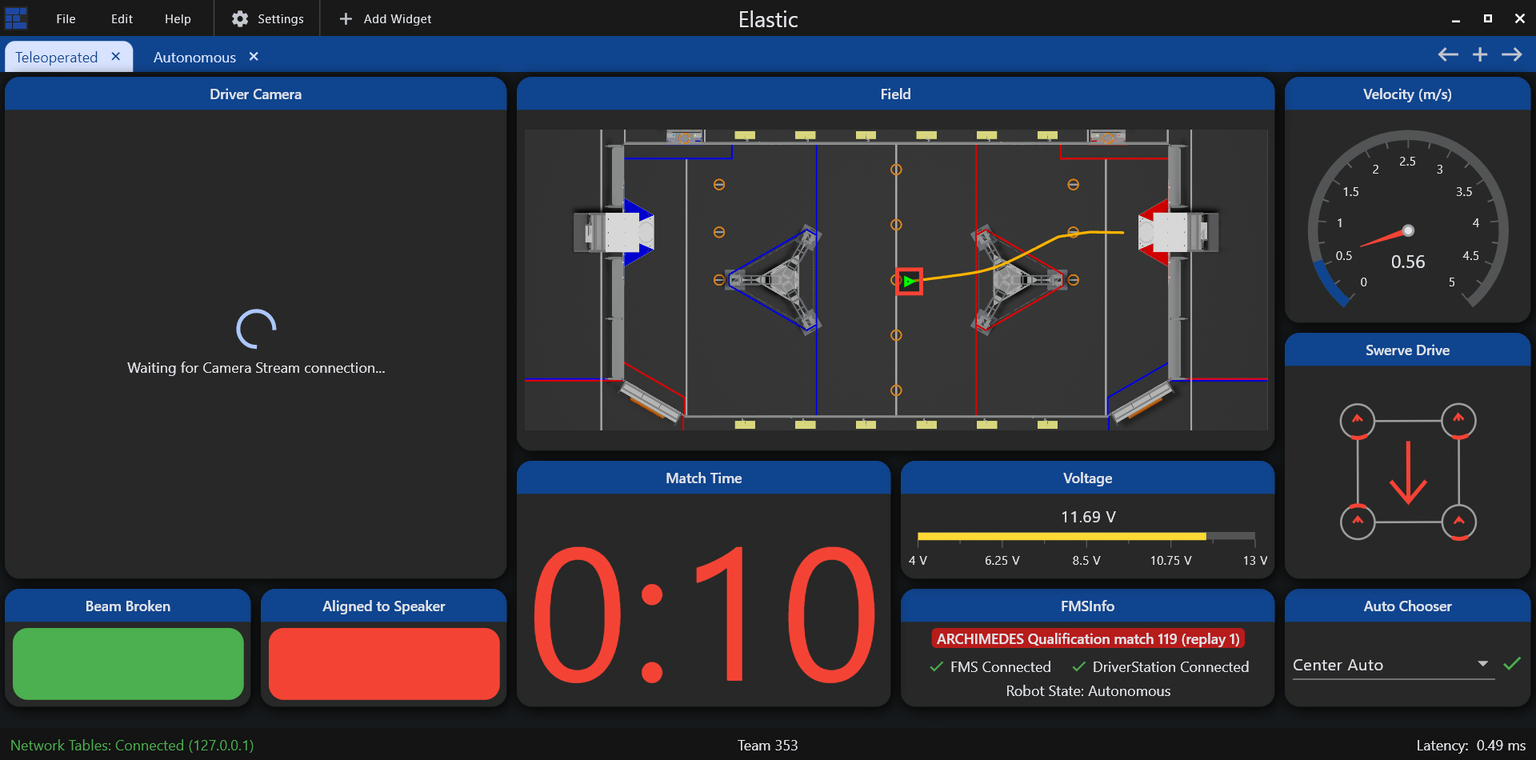

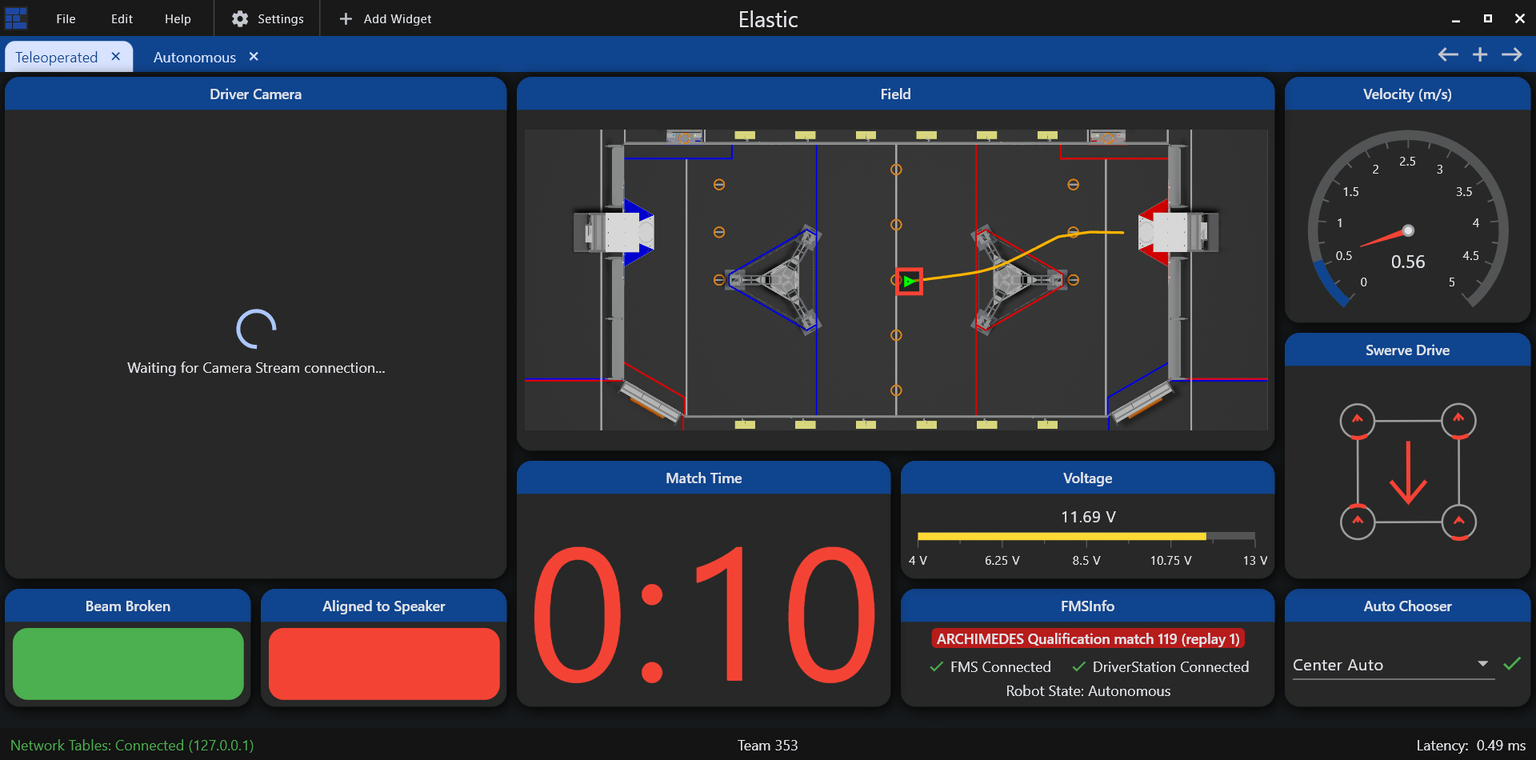

Elastic

Elastic is a telemetry interface oriented more for drivers, but can be useful for programming and other diagnostics. Elastic excels at providing a flexible UI with good at-a-glance visuals for various numbers and directions.

Detailed docs are available here:

https://frc-elastic.gitbook.io/docs

As a driver tool, it's good practice to set up your drivers with a screen according to their preferences, and then make sure to keep it uncluttered. You can go to Edit -> Lock Layout to prevent unexpected changes.

For programming utility, open a new tab, and add widgets and items.

Plotting Data

Motor Control

tags:

Requires: Robot Code Basics

Recommends: Commands

Success Criteria

- Spin a motor

- Configure a motor with max current

- Control on/off via joystick button

- Control speed via joystick

Setup and prep

This curriculum does not require or assume solid knowledge of Command structure; It's just about spinning motors. All code here uses existing Example structure that's default on Command Based Robot projects.

However, it's recommended to learn Motor Control alongside or after Commands, as we'll use them for everything afterwards anyway.

This documentation assumes you have the third party Rev Library installed. You can find instructions here.

https://docs.wpilib.org/en/latest/docs/software/vscode-overview/3rd-party-libraries.html

This document also assumes correct wiring and powering of a motor controller. This should be the case if you're using a testbench.

Rev Hardware Client

Java Code

Minimal Example

Conceptually, running a motor is really easy, with just a few lines of code! You can, in fact, run a motor with this.

// ExampleSubsystem.java

public class ExampleSubsystem extends SubsystemBase{

/// Other code+functions; Ignore for now.

int motorID = 0; //This will depend on the motor you need to run

SparkMax motor = new SparkMax(motorID,MotorType.kBrushless);

public ExampleSubsystem(){}

public void periodic(){

// Will run once every robot loop

// Motors will only run when it's enabled...

// but will *always* run when enabled!

motor.set(0.1);

//Motor range is [-1 .. 1], but we want to run slow for this

}

}

The hard part is always in making it do what you want, when you want.

Practical Example

Most useful robots need a bit more basic setup for the configuration. This example code walks through some of the most common configurations used when making a simple motor system.

// ExampleSubsystem.java

public class ExampleSubsystem extends SubsystemBase{

/// Other code+functions; Ignore for now.

int motorID = 0; //This will depend on the motor you need to run

SparkMax motor = new SparkMax(motorID,MotorType.kBrushless);

public ExampleSubsystem(){

//First we create a configuration structure

var config = new SparkMaxConfig();

//This is the "passive" mode for the motor.

// Brake has high resistance to turning

// Coast will spin freely.

// Both modes have useful applications

config.idleMode(IdleMode.kBrake);

// This changes the direction a motor spins when you give

// it a positive "set" value. Typically, you want to make

// "positive inputs" correlate to "positive outputs",

// such as "positive forward" or "positive upward"

config.inverted(false);

// Reduce the maximum power output of the motor controller.

// Default is 80A, which is *A LOT* of power!

// 10 is often a good starting point, and you can go up from there

config.smartCurrentLimit(10);

// This controls the maximum rate the output can change.

// This is in "time between 0 and full output". A low value

// makes a more responsive system.

// However, zero puts a *lot* of mechanical and electrical

// strain on your system when turning things on/off.

// Having 0.05 is generally an optimal value for starting.

// Check the [[Slew Rate Limiting]] article for more info!

config.openLoopRampRate(0.05);

//Lastly, we apply the parameters

motor.configure(

config,

ResetMode.kResetSafeParameters,

PersistMode.kNoPersistParameters

);

}

public void periodic(){ }

}

A Controllable Robot

Make it spin

The next goal is to make a motor spin when you press a button, and stop when you release it.

The following examples utilize the Command structure, but do not require understanding it. You can just follow along, or read Commands to figure that out.

The minimum bit of knowledge is that

- Commands are an easy way to get the robot to start/stop bits of code

- Commands are an easy way to attach bits of code to a controller

- The syntax looks a bit weird, that's normal.

Trying to avoid the Command structure entirely is simply a lot of work, or involves a lot of really bad habits and starting points to unlearn later.

We're going to work with some Example code that exists in any new project. The relevant part looks like this.

// ExampleSubsystem.java

public class ExampleSubsystem extends SubsystemBase{

/// Other code+functions be here

public Command exampleMethodCommand() {

return runOnce(

() -> {

/* one-time action goes here */

});

}

public void periodic(){ }

}

Let's fill in our subsystem with everything we need to get this moving.

// ExampleSubsystem.java

public class ExampleSubsystem extends SubsystemBase{

int motorID = 0; //This will depend on the motor you need to run

SparkMax motor = new SparkMax(motorID,MotorType.kBrushless);

public ExampleSubsystem(){

var config = new SparkMaxConfig();

// ! Copy all the motor config from the Practical Example above!

// ... omitted here for readability ...

motor.configure(

config,

ResetMode.kResetSafeParameters,

PersistMode.kNoPersistParameters

);

}

public Command exampleMethodCommand() {

return runOnce(

() -> {

motor.set(0.1); // Run at 10% power

});

}

public void periodic(){ }

}

Without any further ado, if you deploy this code, enable the robot, and hit "B", your motor will spin!

CAUTION Note it will not stop spinning! Even when you disable and re-enable the robot, it will continue to spin. We never told it how to stop.

An important detail about smart motor controllers is that they remember the last value they were told to output, and will keep outputting it. As a general rule, we want our code to always provide a suitable output. Commands give us a good tool set to handle this, but for now let's just do things manually.

Making it stop

Next let's make two changes: One, we copy the existing exampleMethodCommand. We'll name the new copy stop, and have it provide an output of 0.

Then, we'll just rename exampleMethodCommand to spin. We should now have two commands that look like this.

// ExampleSubsystem.java

public class ExampleSubsystem extends SubsystemBase{

// ... All the constructor stuff

public Command spin() {

return runOnce(

() -> {

motor.set(0.1); // Run at 10% power

});

}

public Command stop() {

return runOnce(

() -> {

motor.set(0); // Stop the motor

});

}

}

We'll also notice that changing the function name of exampleMethodCommand has caused a compiler error in RobotContainer! Let's take a look

public class RobotContainer{

// ... Bunch of stuff here ...

private void configureBindings() {

// ... Some stuff here ...

//The line with an error

m_driverController.b()

.whileTrue(m_exampleSubsystem.exampleMethodCommand());

}

}

Here we see how our joystick is actually starting our "spin" operation. Since we changed the name in our subsystem, let's change it here too.

We also need to actually run stop at some point; Controllers have a number of ways to interact with buttons, based on the Trigger class.

We already know it runs spin when pressed (true), we just need to add something for when it's released. So, let's do that. The final code should look like

public class RobotContainer{

// ... Bunch of stuff here ...

private void configureBindings() {

// ... Some stuff here ...

m_driverController.b()

.whileTrue(m_exampleSubsystem.spin())

.onFalse(m_exampleSubsystem.stop())

;

}

}

If you deploy this, you should see the desired behavior: It starts when you press the button, and stops when you release it.

Just a heads up: Once we're experienced with Commands we'll have better options for handling stopping motors and handling "default states". This works for simple stuff, but on complex robots you'll run into problems.

Adding a Joystick

So far, we have a start/stop, but only one specific speed. Let's go back to our subsystem, copy our existing function, and introduce some parameters so we can provide arbitrary outputs via a joystick.

Just like any normal function, we can add parameters. In this case, we're shortcutting some technical topics and just passing a whole joystick.

// ExampleSubsystem.java

public class ExampleSubsystem extends SubsystemBase{

// ... All the constructors and other functions

public Command spinJoystick(CommandXboxController joystick) {

return run(() -> { // Note this now says run, not runOnce.

motor.set(joystick.getLeftY());

});

}

}

Then hop back to RobotContainer. Let's make another button sequence, but this time on A

public class RobotContainer{

// ... Bunch of stuff here ...

private void configureBindings() {

// ... Some stuff here ...

//Stuff for the B button

//our new button

m_driverController.a()

.whileTrue(m_exampleSubsystem.spinJoystick(m_driverController))

.onFalse(m_exampleSubsystem.stop())

;

}

}

Give this a go! Now, while you're holding A, you can control the motor speed with the left Y axis.

Long term, we'll avoid passing joysticks; This makes it very hard to keep tabs on how buttons and joysticks are assigned. However, at this stage we're bypassing Lambdas which are the technical topic needed to do this right.

If you can do this with your Commands knowledge, go ahead and fix it after going through this!

Integrating Command Knowledge

We've dodged a lot of "the right way" to get things moving. If you're coming back here after learning Commands, we can adjust things to better represent a real robot with more solid foundations.

Adding a spin button + Making it stop

Knowing how commands work, you know there's a couple wrong parts here.

- The runOnce is suboptimal; This sets the motor, exits, and makes it hard to detect when you cancel it.

- We don't command the robot at all times during motion; most of the time it's running, the motor is just left in its prior state.

- We don't need to add an explicit stop command on our joystick; we have the end state of our commands that can help.

Putting those into place, we get

// ExampleSubsystem.java

public class ExampleSubsystem extends SubsystemBase{

// ... All the constructor stuff

public Command spin() {

return run(() -> {

motor.set(0.1); // Run at 10% power

})

.finallyDo(()->motor.set(0))

;

}

}

This lets us simplify our button a bit, since we now know spin() always stops the motor when we're done.

public class RobotContainer{

// ... Bunch of stuff here ...

private void configureBindings() {

// ... Some stuff here ...

m_driverController.b()

.whileTrue(m_exampleSubsystem.spin());

}

}

As before, our button now starts and stops.

Adding a Joystick

Previously, we just passed the whole joystick to avoid DoubleSuppliers and Lambdas. Let's now add this properly.

// ExampleSubsystem.java

public class ExampleSubsystem extends SubsystemBase{

// ... All the constructors and other functions

public Command spinJoystick(DoubleSupplier speed) {

return run(() -> {

motor.set(speed.getAsDouble()); // DoubleSupplier exposes getAsDouble(), not get()

})

.finallyDo(()->motor.set(0))

;

}

}

Then hop back to RobotContainer, and instead of a joystick, pass the supplier function.

public class RobotContainer{

// ... Bunch of stuff here ...

private void configureBindings() {

// ... Some stuff here ...

//Stuff for the B button

//our new button

m_driverController.a()

.whileTrue(m_exampleSubsystem.spinJoystick(m_driverController::getLeftY))

;

}

}

There we go! We've now corrected some of our shortcuts, and have a code structure more suited for building off of.

Differential Drive

tags:

- stub

Requires:

Commands

Motor Control

Success Criteria

- Create a Differential Drivetrain

- Configure a Command to operate the drive using joysticks

- ??? Add rate limiting to joysticks to make the system control better

- ??? Add constraints to rotation to make robot drive better

Auto Differential

tags:

- stub

Success Criteria

- Create a simple autonomous that drives forward and stops

- Create a two-step autonomous that drives forward and backward

- Create a four step autonomous that drives forward, runs a mock "place object" command, backs up, then turns around.

FeedForwards

Requires:

Motor Control

Success Criteria

- Create a velocity FF for a roller system that enables you to set the output in RPM

- Create a gravity FF for an elevator system that holds the system in place without resisting external movement

- Create a gravity FF for an arm system that holds the system in place without resisting external movement

Synopsis

Feedforwards model an expected motor output for a system to hit specific target values.

The easiest example is a motor roller. Let's say you want to run at ~3000 RPM. You know your motor has a top speed of ~6000 RPM at 100% output, so you'd correctly expect that driving the motor at 50% would get about 3000 RPM. This simple correlation is the essence of a feed-forward. The details are specific to the system at play.

Explanation

The WPILib docs have good fundamentals on feedforwards that are worth reading.

https://docs.wpilib.org/en/stable/docs/software/advanced-controls/controllers/feedforward.html

Tuning Parameters

Feed-forwards are specifically tuned to the system you're trying to operate, but helpfully fall into a few simple terms, and straightforward calculations. In many cases, the addition of one or two terms can be sufficient to improve and simplify control.

kS : Static constant

The simplest feedforward you'll encounter is the "static feed-forward". This term represents initial momentum, friction, and certain motor dynamics.

You can see this in systems by simply trying to move very slowly. You'll often notice that the output doesn't move it until you hit a certain threshold. That threshold is approximately equal to kS.

The static feed-forward affects output according to the simple equation of
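A sketch of that relationship, assuming the standard WPILib model in which the static term only follows the direction of the commanded velocity $v$:

$$
\text{output}_{kS} = k_S \cdot \operatorname{sgn}(v)
$$

Here $\operatorname{sgn}(v)$ is $+1$ when moving forward, $-1$ when moving backward, and $0$ when the commanded velocity is zero.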

kG : Gravity constant

A kG value effectively represents the value needed for a system to negate gravity.

Elevators are the simpler case: You can generally imagine that since an elevator has a constant weight, it should take a constant amount of force to hold it up. This means the elevator Gravity gain is simply a constant value, affecting the output as
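Written out, assuming the standard WPILib elevator model, the gravity term is simply the constant itself, regardless of position or speed:

$$
\text{output}_{kG} = k_G
$$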

A more complex kG calculation is needed for a pivot or arm system. You can get a good sense of this by grabbing a heavy book, and holding it at your side with your arm down. Then, rotate your arm outward, fully horizontal. Then, rotate your arm all the way upward. You'll probably notice that the book is much harder to hold steady when it's horizontal than up or down.

The same is true for these systems, where the force needed to counter gravity changes based on the angle of the system. To be precise, it's maximum at horizontal, zero when directly above or below the pivot. Mathematically, it follows the function

This form of the gravity constant affects the output according to
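Assuming the angle $\theta$ is measured from horizontal (the convention WPILib's ArmFeedforward uses), the scaling function is $\cos(\theta)$, and the gravity term becomes:

$$
\text{output}_{kG} = k_G \cdot \cos(\theta)
$$

This matches the behavior described above: $\cos(0) = 1$ gives the full $k_G$ at horizontal, and $\cos(\pm 90^\circ) = 0$ gives no gravity compensation when the arm points straight up or down.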

kV : Velocity constant

The velocity feed-forward represents the expected output to maintain a target velocity. This term accounts for physical effects like dynamic friction and air resistance, and a handful of

This is most easily visualized on systems with a velocity goal state. In that case,

In contrast, for positional control systems, knowing the desired system velocity is quite a challenge. In general, you won't know the target velocity unless you're using Motion Profiles to generate the instantaneous velocity target.

kA : Acceleration constant

The acceleration feed-forward largely negates a few inertial effects. It simply provides a boost to output to achieve the target velocity quicker.

like

The equations of FeedForward

Putting this all together, it's helpful to de-mystify the math happening behind the scenes.

The short form is just a re-clarification of the terms and their units

A roller system will often simply be

An elevator system will look similar:

Lastly, arm systems differ only by the cosine term to scale kG.
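As a sketch, assuming the standard WPILib feed-forward models (SimpleMotorFeedforward, ElevatorFeedforward, and ArmFeedforward), with $v$ the target velocity, $a$ the target acceleration, and $\theta$ the arm angle measured from horizontal, the three cases can be written as:

$$
\begin{aligned}
\text{roller:} \quad & \text{output} = k_S \cdot \operatorname{sgn}(v) + k_V \cdot v + k_A \cdot a \\
\text{elevator:} \quad & \text{output} = k_G + k_S \cdot \operatorname{sgn}(v) + k_V \cdot v + k_A \cdot a \\
\text{arm:} \quad & \text{output} = k_G \cdot \cos(\theta) + k_S \cdot \operatorname{sgn}(v) + k_V \cdot v + k_A \cdot a
\end{aligned}
$$

If the output is expressed in volts, then $k_S$ and $k_G$ are in volts, $k_V$ is in volts per unit of velocity, and $k_A$ is in volts per unit of acceleration.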

Of course, the intent of a feed-forward is to model your mechanics to improve control. As your system increases in complexity, and demands for precision increase, optimal control might require additional complexity! A few common cases:

- If you have a pivot arm that extends, your kG won't be constant!

- Moving an empty system and one loaded with heavy objects might require different feed-forward models entirely.

- Long arms might be impacted by motion of systems they're mounted on, like elevators or the chassis itself! You can add that in and apply corrective forces right away.

Feed-forward vs feed-back

Since a feed-forward is a prediction about how your system behaves, it works very well for fast, responsive control. However, it's not perfect; if something goes wrong, your feed-forward simply doesn't know about it, because it's not measuring what actually happens.

In contrast, feed-back controllers like a PID are designed to act on the error between a system's current state and target state, and make corrective actions based on the error. Without first encountering system error, it doesn't do anything.

The combination of a feed-forward along with a feed-back system is the power combo that provides robust, predictable motion.
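To make the combination concrete, here is a minimal plain-Java sketch for a velocity-controlled roller. The class and method names are hypothetical, and it assumes a velocity-only feed-forward (just kV) paired with a proportional-only feedback term; real systems usually add more terms to both halves.

```java
/** Hypothetical sketch: a feed-forward prediction plus a proportional correction. */
final class FeedForwardPlusFeedback {
    private FeedForwardPlusFeedback() {}

    /**
     * @param kV volts per unit of velocity (assumed already tuned)
     * @param kP volts per unit of velocity error (assumed already tuned)
     */
    static double outputVolts(double kV, double kP, double targetVelocity, double measuredVelocity) {
        // Prediction: depends only on the target, never on the measurement
        double feedForward = kV * targetVelocity;
        // Correction: zero until there is an error to act on
        double feedBack = kP * (targetVelocity - measuredVelocity);
        return feedForward + feedBack;
    }
}
```

When the measured velocity matches the target, the feedback half contributes nothing and the feed-forward carries the whole output; the feedback half only kicks in once the system drifts.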

FeedForward Code

WPILib has several classes that streamline the underlying math for common systems, although knowing the math still comes in handy! The docs explain them (and associated warnings) well.

https://docs.wpilib.org/en/stable/docs/software/advanced-controls/controllers/feedforward.html

Integrating in a robot project is as simple as crunching the numbers for your feed-forward and adding it to your motor value that you write every loop.

public class ExampleSubsystem extends SubsystemBase {

SparkMax motor = new SparkMax(...);

// Declare our FF terms and our object to help us compute things.

double kS = 0.0;

double kG = 0.0;

double kV = 0.0;

double kA = 0.0;

ElevatorFeedforward feedforward = new ElevatorFeedforward(kS, kG, kV, kA);

ExampleSubsystem(){}

Command moveManual(double percentOutput){

return run(()->{

double output;

//We don't have a motion profile or other velocity control

//Therefore, we can only assert that the velocity and accel are zero

output = percentOutput+feedforward.calculate(0,0);

// If we check the math, this feedforward.calculate() thus

// evaluates as simply kG;

// We can improve this by instead manually calculating a bit

// since we know the direction we want to move in

output = percentOutput + Math.signum(percentOutput) * kS + kG;

motor.set(output);

});

}

Command movePID(double targetPosition){

return run(()->{

//Same notes as moveManual's calculations

var feedforwardOutput = feedforward.calculate(0,0);

// When using the Spark closed loop control,

// we can pass the feed-forward directly to the onboard PID

motor

.getClosedLoopController()

.setReference(

targetPosition,

ControlType.kPosition,

ClosedLoopSlot.kSlot0,

feedforwardOutput,

ArbFFUnits.kPercentOut

);

//Note, the ArbFFUnits should match the units you calculated!

});

}

Command moveProfiled(double targetPosition){

// This is the only instance where we know all parameters to make

// full use of a feedforward.

// Check [[Motion Profiles]] for further reading

}

}

Rev released a new FeedForward config API that might allow advanced feed-forwards to be run directly on controller. Look into it and add examples!

https://codedocs.revrobotics.com/java/com/revrobotics/spark/config/feedforwardconfig

Finding Feed-Forward Gains

When tuning feed-forwards, it's helpful to recognize that values being too high will result in notable problems, but gains being too low generally result in lower performance.

Just remember that the lowest possible value is 0, which is equivalent to not using that feed-forward in the first place. You can only improve from there.

It's worth clarifying that the "units" of feedForward are usually provided in "volts", rather than "percent output". This allows FeedForwards to operate reliably in spite of changes of supply voltage, which can vary from 13 volts on a fresh battery to ~10 volts at the end of a match.

Percent output on the other hand is just how much of the available voltage to output; This makes it suboptimal for controlled calculations in this case.
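The difference is easy to see with a little arithmetic. The sketch below is plain Java with hypothetical names; it converts a desired voltage into the percent output needed at a given battery voltage, clamped to the [-1, 1] range a motor controller accepts.

```java
/** Hypothetical sketch: the percent output needed to produce a desired voltage. */
final class VoltageCompensation {
    private VoltageCompensation() {}

    static double voltsToPercent(double desiredVolts, double batteryVolts) {
        double percent = desiredVolts / batteryVolts;
        // A controller cannot output more than the battery can supply
        return Math.max(-1.0, Math.min(1.0, percent));
    }
}
```

Under these assumptions, a 6 volt request needs 50% output on a 12 volt battery but 60% on a sagging 10 volt battery, which is why a fixed percent output drifts over a match while a voltage-based feed-forward does not.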

Finding kS and kG

These two terms are defined at the boundary between "moving" and "not moving", and thus are closely intertwined. Or, in other words, they interfere with finding the other. So it's best to find them both at once.

It's easiest to find these with manual input, with your controller input scaled down to give you the most possible control.

Start by positioning your system so you have room to move both up and down. Then, hold the system perfectly steady, and increase output until it just barely moves upward. Record that value.

Hold the system stable again, and then decrease output until it just barely starts moving down. Again, record the value.

Thinking back to what each term represents, if a system starts moving up, then the provided input must be equal to

Helpfully, for systems where

For pivot/arm systems, this routine works as described if you can calculate kG at approximately horizontal. It cannot work if the pivot is vertical. If your system cannot be held horizontal, you may need to be creative, or do a bit of trig to account for your recorded

Importantly, this routine actually returns a kS that's often slightly too high, resulting in undesired oscillation. That's because we recorded a minimum that causes motion, rather than the maximum value that doesn't cause motion. Simply put, it's easier to find this way. So, we can just compensate by reducing the calculated kS slightly; Usually multiplying it by 0.9 works great.
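The arithmetic for this routine can be sketched in plain Java. This assumes the usual model, where the output that just starts upward motion is kG + kS and the output that just starts downward motion is kG - kS; the class, record, and parameter names are hypothetical. The scale factor is the 0.9 reduction suggested above.

```java
/** Hypothetical sketch: recover kS and kG from the two recorded outputs. */
final class StaticGravityGains {
    private StaticGravityGains() {}

    /** Result holder; both gains share the units of the recorded outputs. */
    record Gains(double kS, double kG) {}

    static Gains solve(double outputMovingUp, double outputMovingDown, double kSScale) {
        // up = kG + kS and down = kG - kS, so the average isolates kG...
        double kG = (outputMovingUp + outputMovingDown) / 2.0;
        // ...and half the difference isolates kS, reduced slightly to avoid oscillation
        double kS = (outputMovingUp - outputMovingDown) / 2.0 * kSScale;
        return new Gains(kS, kG);
    }
}
```

For example, recorded outputs of 0.9 (up) and 0.5 (down) with a 0.9 scale give a kG of about 0.7 and a kS of about 0.18.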

Finding roller kV

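Because this type of system is also relatively linear and simple, finding it is pretty simple. We know that

$$V = kV \cdot velocity$$

We know the voltage we applied, and we can measure the velocity the roller settles at.

This means we can quickly assert that

$$kV = \frac{V}{velocity}$$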
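As a rough sketch of that relationship in plain Java (the names are hypothetical), assuming the roller's feedforward output is simply kV times velocity and that you have measured the steady velocity reached at a known voltage:

```java
/** Hypothetical helper for estimating and applying a roller kV. */
final class RollerKv {
    /** Estimate kV from an applied voltage and the steady velocity it produced. */
    static double estimateKv(double appliedVolts, double measuredVelocity) {
        if (measuredVelocity == 0.0) {
            throw new IllegalArgumentException("Roller must be spinning to estimate kV");
        }
        return appliedVolts / measuredVelocity;
    }

    /** Voltage to request for a target velocity, ignoring kS. */
    static double feedforwardVolts(double kV, double targetVelocity) {
        return kV * targetVelocity;
    }
}
```

The velocity unit does not matter as long as the same unit is used for the measurement and for later targets.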

Finding kV + kA

Beyond roller kV, kA and kV values are tricky to identify with simple routines, and require Motion Profiles to take advantage of. As such, they're somewhat beyond the scope of this article.

The optimal option is using System Identification to calculate the system response to inputs over time. This can provide optimal, easily repeatable results. However, it involves a lot of setup, and is potentially hazardous to your robot when done without caution.

The other option is to tune by hand; This is not especially challenging, and mostly involves a process of moving between goal states, watching graphs, and twiddling numbers. It usually looks like this:

- Identify two setpoints, away from hard stops but with sufficient range of motion you can hit target velocities.

- While cycling between setpoints, increase kV until the system generates velocities that match the target velocities. They'll generally lag behind during the acceleration phase.

- Then, increase kA until the acceleration shifts and the system perfectly tracks your profile.

- Increase profile constraints and repeat until the desired system performance is attained. Starting small and slow prevents damage to the mechanics of your system.

This process benefits from a relatively low P gain, which helps keep the system stable and near the intended setpoints, but running without a PID at all is actually very informative too.

Once your system is tuned, you'll probably want a relatively high P gain, now that you can assert the feed-forward is keeping your error close to zero. Be aware that with a high P gain, slight kV errors can produce a "jerky" motion. Lowering kV slightly below nominal can help resolve this.

Modelling changing systems

NEEDS TESTING We haven't had to do this much! Be mindful that this section may contain structural errors, or we may find better ways to approach these issues

When considering these systems, remember that they're linear systems: This helpful quirk means we can simply add the system responses together:

ElevatorFeedforward feedforward = new ElevatorFeedforward(kS, kG, kV, kA);

ElevatorFeedforward ff_gamepiece = new ElevatorFeedforward(kS, kG, kV, kA);

//...

var output = feedforward.calculate(...) + ff_gamepiece.calculate(...);

This lets you respond to things such as a heavy game piece being loaded.

Modelling Complex Systems

As systems increase in complexity and precision requirements, you might need to do more advanced modelling, while keeping in mind the mathematical flexibility of adding and multiplying smaller feed-forward components together!

Here are some examples:

- If your arm extends, you can simply interpolate between a "fully retracted" feedforward and a "fully extended" one; Thanks to the system linearity, this allows you to model the entire range of values quickly and easily.

- Arm motions can be impacted by the acceleration of a chassis or elevator; While gravity is a constant acceleration (and thus constant force), other non-constant accelerations can also be modeled as part of your feedforward and added to the base calculations.
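The extending-arm case can be sketched like this. It is a minimal illustration in plain Java with hypothetical names, and it assumes the arm angle is measured from horizontal so that the gravity term scales with the cosine of the angle. Only the kG term is interpolated here; the same idea applies to the other gains.

```java
/** Hypothetical gravity feedforward for an arm whose length changes. */
final class ExtendingArmFeedforward {
    /** Linear interpolation between a and b; t is clamped to 0..1. */
    static double lerp(double a, double b, double t) {
        double clamped = Math.max(0.0, Math.min(1.0, t));
        return a + (b - a) * clamped;
    }

    /**
     * kGRetracted / kGExtended: gravity gains tuned at the two extremes.
     * extensionFraction: 0 when fully retracted, 1 when fully extended.
     * angleRadians: arm angle, with 0 meaning horizontal (an assumption of this sketch).
     */
    static double gravityVolts(double kGRetracted, double kGExtended,
                               double extensionFraction, double angleRadians) {
        double kG = lerp(kGRetracted, kGExtended, extensionFraction);
        return kG * Math.cos(angleRadians);
    }
}
```

With gains of 0.4 (retracted) and 1.0 (extended), a half-extended horizontal arm would request about 0.7 volts to hold position.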

Footnotes

Note, you might observe that the kCos output,

Motion Profiles

aliases:

- Motion Profile

- Trapezoidal Profile

Success Criteria

- Configure a motion system with PID and FeedForward

- Add a trapezoidal motion profile command (runs indefinitely)

- Create a decorated version with exit conditions on reaching the target

- Create a small auto sequence to cycle multiple points

- Create a set of buttons for different setpoints

Synopsis

Motion Profiling is the process of generating smooth, controlled motion. This is typically done using controlled, intermediate setpoints, consideration of the system's physical properties, and other imposed limitations.

In FRC our tooling generally utilizes a Trapezoidal profile, allowing us precise control of system position, maximum system speed, and acceleration applied to the system.

Benefits of Motion profiling

Motion Profiling resolves several problems that can come up with "simpler" control systems such as simple open loop or straight PID control.

The first comes from acknowledgement of the basic physics equation $F = ma$.

The next relates to "tuning": The process of adjusting robot parameters to generate consistent motion. If you've gone through the PID tuning process, you probably remember one struggle: Your tuning works great until you move the setpoint a larger distance, at which point it wildly misbehaves, and the system acts erratically. This case is caused by sharp changes in system error, resulting in the PID generating a large output. Motion profiles instead change the setpoint at a controlled rate, ensuring a small system error and keeping the PID in a much more stable state.

The extra complexity is worth it! Tuning a system using a Motion Profile is significantly less work than without it, since all the biggest pain points are eliminated. Your PID behaves better, with no overshoot or sharp outputs, and your system

How it works

For these purposes, we're going to discuss a "positional" control system, such as an Elevator or Arm.

Motion Profiles still apply to velocity control Rollers and Flywheels; In those cases, we ignore the position, smoothly accelerating to our target velocity. This means we only see half the benefit on those systems.

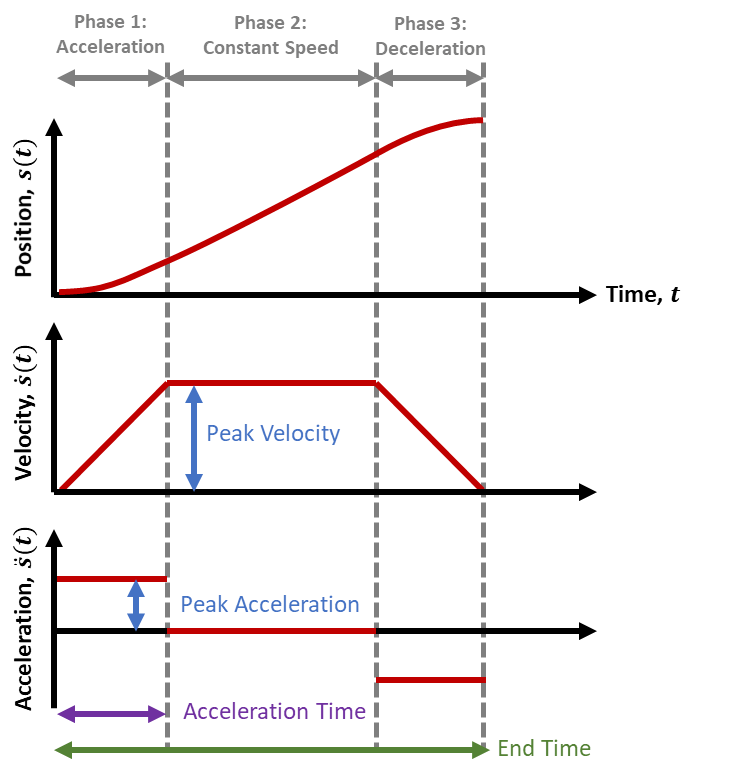

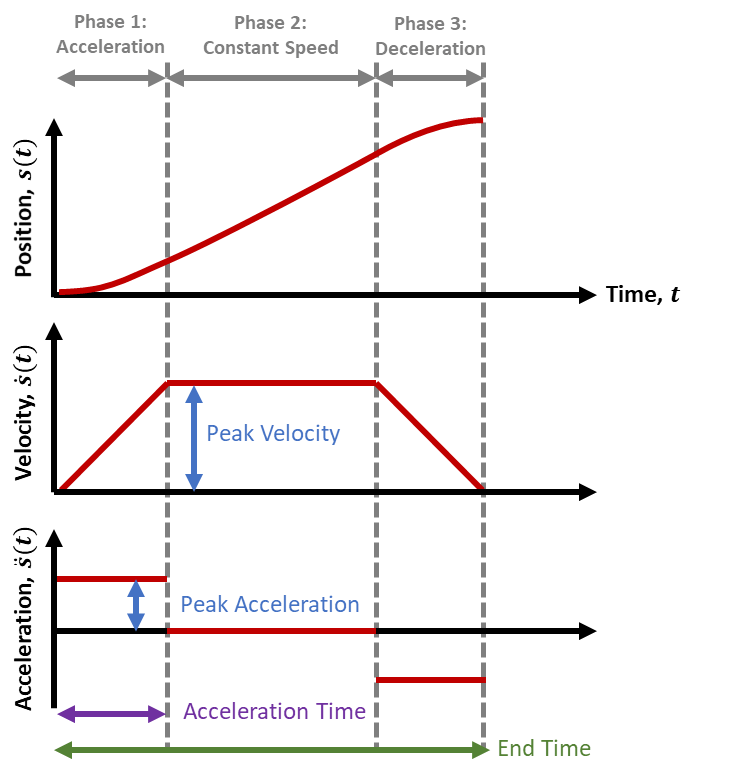

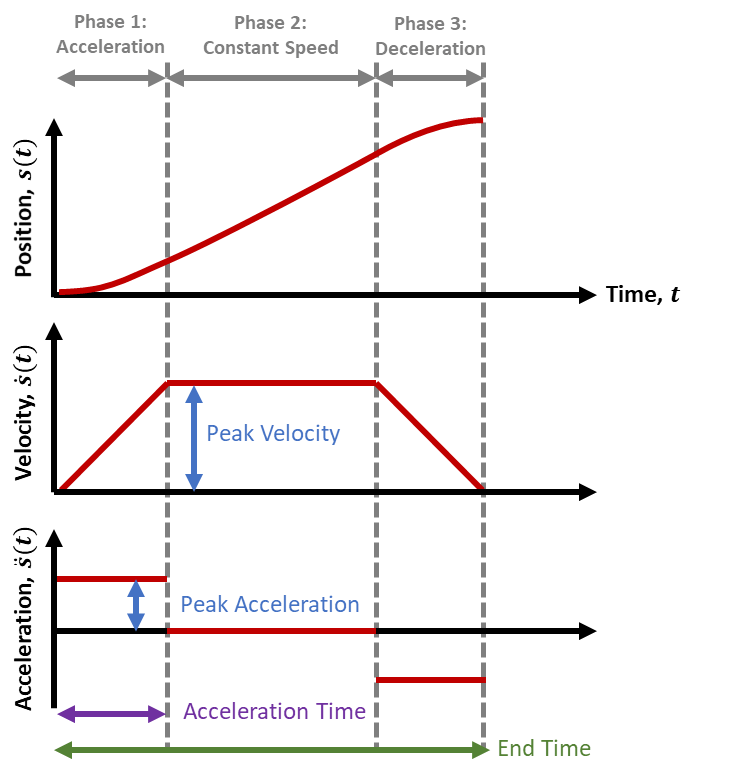

The system we typically use is a "Trapezoidal" profile, named after the shape of the velocity graph it generates.

This system has just two parameters:

- A maximum velocity

- A maximum acceleration

By having these values set at values the physical system can achieve, our motion profile can split up one large motion into 3 segments.

- An acceleration step, where the motor is approaching the max speed

- Running at our max speed

- Decelerating on approach to the final position.

Because our Motion Profile is aware of our system capabilities, it can then constrain our setpoint changes to ones that our system can actually achieve, generating a smooth motion without overshooting our final target (or goal).

Since we know how long it takes to accelerate and decelerate, and the max speed, we can also predict exactly how long the whole motion takes!

The entire motion looks just like this:

Finding Max Velocity

A theoretical maximum can be found by combining your motor's maximum RPM with your gear reduction and any unit conversion: It's very similar to configuring your encoder conversion factor, then feeding it your maximum motor velocity.
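A minimal sketch of that calculation in plain Java follows. The names are hypothetical, and it assumes the gear reduction is expressed as motor rotations per output rotation and that you know how far the mechanism travels per output rotation.

```java
/** Hypothetical helper for a theoretical (no-load) maximum mechanism velocity. */
final class MaxVelocityEstimate {
    /**
     * motorFreeSpeedRpm: the motor's maximum RPM, from its datasheet.
     * gearReduction: motor rotations per one output rotation (10 for a 10:1 reduction).
     * distancePerOutputRotation: travel per output rotation, in your chosen unit.
     * Returns the velocity in that unit per second.
     */
    static double unitsPerSecond(double motorFreeSpeedRpm, double gearReduction,
                                 double distancePerOutputRotation) {
        double outputRotationsPerSecond = motorFreeSpeedRpm / 60.0 / gearReduction;
        return outputRotationsPerSecond * distancePerOutputRotation;
    }
}
```

A 6000 RPM motor through a 10:1 reduction that moves 5 inches per output rotation tops out at 50 inches per second in theory. A loaded system will be slower, so treat this as an upper bound.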

In practice, you normally want to start by setting it slow, at something that you can visually track: Often ~1-2 seconds for a specific range of motion. This helps with testing, since you can see it work and mentally confirm whether it's what you expect.

As you improve and tune your system, you can simply then increase the maximum until your system feels unsafe, and then go back down a bit.

In many practical FRC systems, you may not hit the actual maximum speed of your system: Instead, the system will simply accelerate to the halfway point and then decelerate. This is normal, and simply means the system is constrained by the acceleration.
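The timing implied by the two constraints, including the case where the system never reaches max speed, can be sketched in plain Java. The names are hypothetical, and the sketch assumes the motion starts and ends at rest with the same limit used for acceleration and deceleration.

```java
/** Hypothetical helper that predicts how long a profiled move takes. */
final class TrapezoidTiming {
    static double totalSeconds(double distance, double maxVelocity, double maxAcceleration) {
        double d = Math.abs(distance);
        // Distance covered while accelerating from rest to max velocity
        // (the deceleration phase covers the same distance).
        double accelDistance = maxVelocity * maxVelocity / (2.0 * maxAcceleration);
        if (2.0 * accelDistance >= d) {
            // Acceleration-limited: accelerate to the halfway point, then decelerate.
            return 2.0 * Math.sqrt(d / maxAcceleration);
        }
        double accelTime = maxVelocity / maxAcceleration;
        double cruiseTime = (d - 2.0 * accelDistance) / maxVelocity;
        return 2.0 * accelTime + cruiseTime;
    }
}
```

With a max velocity of 2 and a max acceleration of 1, a 10 unit move takes 7 seconds (2 accelerating, 3 cruising, 2 decelerating), while a 1 unit move is acceleration-limited and takes 2 seconds.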

Finding Max Acceleration

The maximum acceleration effectively represents the actual force we're telling the motor to apply. It's easiest to understand at the extremes:

- When set to zero, your system will never have force applied; It won't move at all.

- When set to infinite, your system assumes it can reach the max velocity instantly; This is effectively just applying as much force as your system is configured for.

However, in actual systems you want them to move, so zero is useless. And infinite is useful, but impractical: Thinking back to our equation, $F = ma$, we can rearrange it as $a = F / m$.

This final form helps us clearly see we have a maximum: Our output force is defined by the motor's max output and our system's mass, giving us a maximum constraint: $a_{max} = F_{max} / m$

Note this is highly simplified: Actually calculating max acceleration this way on real-world systems is often non-trivial, and involves significantly more variables and equations than listed here. However, it's the concept that's important.

In practice, the easiest way to find acceleration is to simply start small and increase the acceleration until the system moves how you want. If it starts making concerning noises or over-shooting, then you've gone too far, and you should back it off.

Revisiting the graph

With some background, there are a couple of ways to visually interpret this graph:

- This graph always represents the generated profile, but also should reflect your actual position too! When configured correctly, the generated graph is always within our system's capability.

- The calculated "position" setpoint is generally what we feed to our system's PID as its setpoint.

- The "acceleration" impacts the angle on our velocity trapezoid! At infinite, it's vertical, and at zero, it's horizontal.

Interactions with FeedForwards

Having a motion profile also enables inputs for FeedForwards, allowing even higher levels of precision on your expected outputs.

At the basic level, positional PIDs can be trivially configured with kG and kS, which only depend on values known on all systems. kG is constant or depends on position, and kS depends only on direction of motion.

However, the kV and kA gains depend not just on position, but also on known velocity and acceleration targets, which we haven't had with arbitrary setpoint jumps.

But now, we can plan our motion: Giving us velocity and acceleration targets. With the right feedforwards, we can now compute the moment-to-moment output for the entire motion in advance!
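As a sketch of what that computation looks like, here is a hand-written stand-in for an elevator-style feedforward in plain Java. The class name is hypothetical and this is not the WPILib class; it only illustrates how a profile's velocity and acceleration targets feed the four gains.

```java
/** Hypothetical stand-in showing how profile targets become a voltage. */
final class SimpleElevatorFeedforward {
    private final double kS;
    private final double kG;
    private final double kV;
    private final double kA;

    SimpleElevatorFeedforward(double kS, double kG, double kV, double kA) {
        this.kS = kS;
        this.kG = kG;
        this.kV = kV;
        this.kA = kA;
    }

    /** Voltage for the velocity and acceleration the profile wants right now. */
    double calculate(double velocity, double acceleration) {
        return kS * Math.signum(velocity) // static friction, in the direction of travel
                + kG                      // constant gravity hold
                + kV * velocity           // voltage needed to sustain the speed
                + kA * acceleration;      // extra voltage needed to change the speed
    }
}
```

Each loop, the profile's current velocity and acceleration setpoints go in and the result is added to the PID output.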

Interaction with PIDs

The most important part is that our error changes from one big setpoint jump to a lot of small, properly calculated ones.

Without a feed-forward, simply having a motion profile completely removes the big transitions and associated output spikes. This makes tuning simpler, less critical, and often allows for more aggressive PID gains without problems. However, many positional systems will still need an I term to hit setpoints accurately without rapid oscillations from a high P term.

With a partial FeedForward (kG + kS) the Feed-Forward handles the base lifting, reducing the need for a PID to handle gravity. This leaves the PID to handle the motion itself, and it can easily hit setpoint targets without an I term, barring weight changes (such as loaded game pieces).

With a full FeedForward, the error between commanded motion and actual motion will be extremely small, making the PID's impact almost completely irrelevant. This makes the PID tuning extremely easy, and the gains can be tuned for precisely the expected disturbances you'll encounter, such as impacts or weight variation.

Note that despite having theoretically minimal impact, you would still always want a PID to ensure the system gets back to position in case of error.

Tuning Errors and Adjustments

Fortunately, with motion profiles you get a lot, with very little in the way of potential problems.

Most errors can be easily captured by looking at the position and velocity graph of a real system, and applying a bit of reasoning on what line isn't matching up.

- Overshooting the position setpoint at the end: This likely means your acceleration is too high, or your I gain is too large.

- The system is lagging behind the setpoints and the lines are diverging entirely. It then overshoots the setpoint: Your system cannot output enough power to meet your acceleration constraint. Make sure you have appropriate current limits, and/or lower the acceleration to within the system capability.

- The system is lagging behind the setpoints so the lines are parallel but offset: This is typical when not using kA+kV, or when they're set too low. The difference is how long it takes the PID control to kick in and make up for what kA+kV should be generating for that motion.

- The motion is very jerky when travelling at the max speed: This usually means kV is too high, but can be kS being too high. Lowering kP may help as well.

- It starts really awful and smooths out: Likely kA is too high, although again kS and kP can influence this.

Implementing <system type here>

The full system and example code is part of the system descriptions:

The most effective way in general is to use the WPILib ProfiledPIDController, which provides the simplest complete implementation.

This works as a straight upgrade to the standard PID configuration, but takes 2 additional parameters that grant huge performance gains and easier tuning.

public class ExampleElevator extends SubsystemBase {

SparkMax motor = new SparkMax(42, MotorType.kBrushless);

//Configure reasonable profile constraints

private final TrapezoidProfile.Constraints constraints =

new TrapezoidProfile.Constraints(kMaxVelocity, kMaxAcceleration);

//Create a PID that obeys those constraints in its motion

//Likely, you will have kI=0 and kD=0

//Note, the unit of our PID will need to be in Volts now.

private final ProfiledPIDController controller =

new ProfiledPIDController(kP, kI, kD, constraints, 0.02);

//Our feedforward object appropriate for our subsystem of choice

//When in doubt, set these to zero (equivalent to no feedforward)

//Will be calculated as part of our tuning.

private final ElevatorFeedforward feedforward =

new ElevatorFeedforward(kS, kG, kV);

//Lastly, we actually use our new controller and feedforward

public Command setHeight(Supplier<Distance> position){

return run(

()->{

//Update our goal with any new targets

controller.setGoal(position.get().in(Inches));

//Calculate the voltage and apply it to the motor

motor.setVoltage(

controller.calculate(motor.getEncoder().getPosition())

+ feedforward.calculate(controller.getSetpoint().velocity)

);

});

}

}

The big difference compared to our other, simpler PID is that we're using motor.setVoltage for this; This relates to the inclusion of FeedForwards, which prefer volts for improved consistency as the battery drains.

While the PID itself doesn't care, since we're adding them together they do need to be the same unit.

If you already calculated your PID gains using motor.set() or

Limelight Basics

tags:

- stub

Requires:

Triggers

Basic Telemetry

Success Criteria

- Configure a Limelight to

- Identify an April Tag

- Create a trigger that returns true if a target is in view

- When a target is in view, print the offset between forward and the target

- Estimate the distance to the target

- Configure the LL to identify a game piece of your choice.

- Indicate angle between forward and game piece.

Homing Sequences

aliases:

- Homing

Homing is the process of recovering physical system positions, typically using relative encoders.

Part of:

SuperStructure Arm

SuperStructure Elevator

And will generally be done after most requirements for those systems

Success Criteria

- Home a subsystem using a Command-oriented method

- Home a subsystem using a state-based method

- Make a non-homed system refuse non-homing command operations

- Document the "expected startup configuration" of your robot, and how the homing sequence resolves potential issues.

Lesson Plan

- Configure encoders and other system configurations

- Construct a Command that homes the system

- Create a Trigger to represent if the system is homed or not

- Determine the best way to integrate the homing operation. This can be

- Initial one-off sequence on enable

- As a blocking operation when attempting to command the system

- As a default command with a Conditional Command

- Idle re-homing (eg, correcting for slipped belts when system is not in use)

Success Criteria

- Home an elevator system using system current

- Home an arm system using system current

- Home a system

What is Homing?

When a system is booted using Relative Encoders, the encoder boots with a value of 0, like you'd expect. However, the real physical system can be anywhere in its normal range of travel, and the bot has no way to know the difference.

Homing is the process of reconciling this difference, thus allowing your code to assert a known physical position, regardless of what position it was in when the system booted.

To Home or not to home

Homing is not a hard requirement of Elevator or Arm systems. As long as you boot your systems in known, consistent states, you can operate without issue.

However, homing is generally recommended, as it provides benefits and safeguards:

- You don't need strict power-on procedures. This is helpful at practices when the bot will be power cycled and get new uploaded code regularly.

- Power loss protection: If the bot loses power during a match, you just lose time when re-homing; You don't lose full control of the bot, or worse, cause serious damage.

- Improved precision: Homing the system via code ensures that the system is always set to the same starting position.

Homing Methods

When looking at homing, the concept of a "Hard Stop" will come up a lot. A hard stop is simply a physical constraint at the end of a system's travel, that you can reliably anticipate the robot hitting without causing system damage.

In some bot designs, hard stops are free. In other designs, hard stops require some specific engineering design.

Any un-homed system has potential to perform in unexpected ways, potentially causing damage to itself or its surroundings.

We'll gloss over this for now, but make sure to set safe motor current constraints by default, and only enable full power when homing is complete.

No homing + strict booting process.

With this method, the consistency comes from the physical reset of the robot when first powering on the robot. Humans must physically set all non-homing mechanisms, then power the robot.

From here, you can do anything you would normally do, and the robot knows where it is.

This method is often "good enough", especially for testing or initial bringup. For some robots, gravity makes it difficult to boot the robot outside of the expected condition.

With this method, make sure your code does not reset encoder positions when initializing.

If you do, code resets or power loss will cause a de-sync between the booted position and the operational one. You have to trust the motor controller + encoder to retain positional accuracy.

Current Detection

Current detection is a very common, and reliable method within FRC. With this method, you drive the system toward a hard stop, and monitor the system current.

When the system hits the hard stop, the load on your system increases, requiring more power. This can be detected by polling for the motor current. When your system exceeds a specific current for a long enough time, you can assert that your system is homed! A Trigger is a great tool for helping monitor this condition.
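The "exceeds a specific current for a long enough time" check can be sketched in plain Java as below. The class is hypothetical and uses no robot libraries; in a real robot project a debounced Trigger may fill the same role.

```java
/** Hypothetical detector: true once current stays above a threshold long enough. */
final class CurrentHomingDetector {
    private final double thresholdAmps;
    private final double requiredSeconds;
    private double secondsAbove = 0.0;

    CurrentHomingDetector(double thresholdAmps, double requiredSeconds) {
        this.thresholdAmps = thresholdAmps;
        this.requiredSeconds = requiredSeconds;
    }

    /** Call once per loop with the latest current reading and the loop period. */
    boolean update(double measuredAmps, double dtSeconds) {
        if (measuredAmps > thresholdAmps) {
            secondsAbove += dtSeconds;
        } else {
            secondsAbove = 0.0; // any dip below the threshold restarts the timer
        }
        return secondsAbove >= requiredSeconds;
    }
}
```

Requiring the current to stay high for a short time filters out the brief spike that occurs when the motor first starts moving.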

Velocity Detection

Velocity detection works by watching the encoder's velocity. You expect that when you hit the hard stop, the velocity should be zero, and go from there. However, there are some surprises that make this more challenging than current detection.

Velocity measurements can be very noisy, so using a filter is generally required, although debounced Triggers can sometimes work.

This method also suffers from the simple fact that the system velocity will be zero when homing starts. And zero is also the speed you're looking for as an end condition. You also cannot guarantee that the system speed ever increases above zero, as it can start against the hard stop.

As such, you can't do a simple check, but need to monitor the speed for long enough to assert that the system should have moved if it was able to.
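One way to combine the filtering and the "long enough" requirement is sketched below in plain Java. The class name, the moving-average filter, and the loop-count approach are all assumptions for illustration, not a prescribed method.

```java
import java.util.ArrayDeque;

/** Hypothetical detector: true once the averaged velocity stays near zero long enough. */
final class VelocityHomingDetector {
    private final ArrayDeque<Double> window = new ArrayDeque<>();
    private final int windowSize;
    private final double stallVelocity;
    private final int requiredLoops;
    private int loopsStopped = 0;

    VelocityHomingDetector(int windowSize, double stallVelocity, int requiredLoops) {
        this.windowSize = windowSize;
        this.stallVelocity = stallVelocity;
        this.requiredLoops = requiredLoops;
    }

    /** Call once per loop while driving toward the hard stop. */
    boolean update(double rawVelocity) {
        // Moving average to smooth out noisy velocity readings
        window.addLast(rawVelocity);
        if (window.size() > windowSize) {
            window.removeFirst();
        }
        double sum = 0.0;
        for (double v : window) {
            sum += v;
        }
        double average = sum / window.size();

        // Count consecutive loops where the system is effectively stopped
        if (Math.abs(average) < stallVelocity) {
            loopsStopped++;
        } else {
            loopsStopped = 0;
        }
        return loopsStopped >= requiredLoops;
    }
}
```

Pick requiredLoops so that a system that was free to move would certainly have started moving by then; that covers the case where it begins already resting against the hard stop.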

Limit Switches

Limit switches are a tried and true method in many systems. You simply place a physical switch at the end of travel; When the bot hits the end of travel, you know where it is.

Limit switches require notable care on the design and wiring to ensure that the system reliably contacts the switch in the manner needed.

The apparent simplicity of a limit switch hides several design and mounting considerations. In an FRC environment, some of these are surprisingly tricky.

- A limit switch must not act as an end stop. Simply put, they're not robust enough to sustain impacts and will fail, leaving your system in an uncontrolled, downward driving state.

- A limit switch must be triggered at the end of travel; Otherwise, it's possible to start below the switch.

- A switch must have a consistent "throw" ; It should trip at the same location every time. Certain triggering mechanisms and arms can cause problems.

- If the hard stop moves or is adjusted, the switch will be exposed to damage, and/or result in other issues.

Because of these challenges, limit switches in FRC tend to be used in niche applications, where use of hard stops is restricted. One such case is screw-driven Linear Actuators, which generate enormous amounts of force at very low currents, but are very slow and easy to mount things to.

Switches also come in multiple types, which can impact the ease of design. In many cases, a magnetic hall effect sensor is optimal, as it's non-contact, and easy to mount alongside a hard stop to prevent overshoot.

Most 3D printers use limit switches, allowing for very good demonstrations of the routines needed to make these work with high precision.

For designs where hard stops are not possible, consider a Roller Arm Limit Switch and run it against a CAM. This configuration allows the switch to be mounted out of the line of motion, but with an extended throw.

Index Switches

Index switches work similarly to Limit Switches, but the expectation is that they're in the middle of the travel, rather than at the end of travel. This makes them unsuitable as a solo homing method, but useful as an auxiliary one.

Index switches are best used in situations where other homing routines would simply take too long, but you have sufficient knowledge to know that it should hit the switch in most cases.

This can often come up in Elevator systems where the robot starting configuration puts the carriage far away from the nearest limit.

In this configuration, use of a non-contact switch is generally preferred, although a roller-arm switch and a cam can work well.

Absolute Position Sensors

In some cases we can use absolute sensors such as Absolute Encoders, Gyros, or Range Finders to directly detect information about the robot state, and feed that information into our other sensors.

This method works very effectively on Arm based systems; Absolute Encoders on an output shaft provide a 1:1 system state for almost all mechanical designs.

Elevator systems can also use these routines using Range Finders, detecting the distance between the carriage and end of travel.

Clever designers can also use Absolute Encoders for elevators in a few ways:

- You can simply assert a position within a narrow range of travel

- You can gear the encoder to have a lower resolution across the full range of travel. Many encoders have enough precision that this is perfectly fine.

- You can use multiple absolute encoders to combine the above global + local states

For a typical system using Spark motors and Through Bore Encoders, it looks like this:

public class ExampleSubsystem{

SparkMax motor = new SparkMax(/*......*/);

ExampleSubsystem(){

SparkBaseConfig config = new SparkMaxConfig();

//Configure the motor's encoders to use the same real-world unit

motor.configure(config,/***/);

//We can now compare the values directly, and initialize the

//Relative encoder state from the absolute sensor.

var angle = motor.getAbsoluteEncoder().getPosition();

motor.getEncoder().setPosition(angle);

}

}

Time based homing

A relatively simple routine: just running your system with a known minimum power for a set length of time can ensure the system gets into a known position. After that time, you can reset the encoder.

This method is very situational. It should only be used in situations where you have a solid understanding of the system mechanics, and know that the system will not encounter damage when run for a set length of time. This is usually paired with a lower current constraint during the homing operation.

Backlash-compensated homing

In some cases you might be able to find the system home state (using gravity or another method), but find backlash is preventing you from hitting desired consistency and reliability.

This is most likely to be needed on Arm systems, particularly actuated Shooter systems. This is akin to a "calibration" as much as it is homing.

In these cases, homing routines will tend to find the absolute position by driving downward toward a hard stop. In doing so, this applies drive train tension toward the down direction. However, during normal operation, the drive train tension will be upward, against gravity.

This gives a small, but potentially significant difference between the "zero" detected by the sensor, and the "zero" you actually want. Notably, this value is not a consistent value, and wear over the life of the robot can impact it.

Similarly, in "no-homing" scenarios where you have gravity assertion, the backlash tension is effectively randomized.

To resolve this, backlash compensation then needs to run to apply tension "upward" before asserting a fully defined system state. This is a scenario where a time-based operation is suitable, as it's a fast operation, from a known state. The power applied should also be small, ideally the largest value that won't cause actual motion away from your hard stop (meaning, at/below kS+kG).

For an implementation of this, see CalibrateShooter from Crescendo.

Online position recovery

Nominally, homing a robot is done once at first run, and from there you know the position. However, sometimes the robot has known mechanical faults that cause routine loss of positioning from the encoder's perspective. In those cases, other sensors may be able to provide insight, and help correct the error.

This kind of error most typically shows up in belt or chain skipping.

To overcome these issues, what you can do is run some condition checking alongside your normal runtime code, trying to identify signs that the system is in a potentially incorrect state, and correcting sensor information.

This is best demonstrated with examples:

- If you home an elevator to the bottom of its travel at position 0, you should never see encoder values be negative. As such, seeing a "negative" encoder value tells you that the mechanism has hit end of travel.

- If you have a switch at the limit of travel, you can just re-assert zero every time you hit it. If there's a belt slip, you still end up at zero.

- If an arm should rest in an "up" position, but the slip tends to push it down, retraction failures might have no good detection modes. So, simply apply a re-homing technique whenever the arm is in idle state.
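The first two examples boil down to a small check that can run every loop. Here is a minimal sketch in plain Java with hypothetical names; how the corrected value gets written back to your encoder depends on your motor controller.

```java
/** Hypothetical runtime check for an elevator homed to 0 at the bottom of travel. */
final class PositionRecovery {
    /**
     * Returns the position the encoder should be reset to, or the
     * unchanged reading when nothing looks wrong.
     */
    static double correctedPosition(double encoderPosition, boolean limitSwitchPressed) {
        // A pressed limit switch or an impossible negative reading both
        // mean the mechanism is sitting at the bottom of travel.
        if (limitSwitchPressed || encoderPosition < 0.0) {
            return 0.0;
        }
        return encoderPosition;
    }
}
```

If the corrected value differs from the reading, a belt or chain has likely skipped, which is worth logging so the hardware fault gets fixed.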

Online Position Recovery is a useful technique in a pinch. But, as with all other hardware faults, it's best to fix it in hardware. Use only when needed.

If the system is running nominally, these techniques don't provide much value, and can cause other runtime surprises and complexity, so it's discouraged.

In cases where such loss of control is hypothetical or infrequent, simply giving drivers a homing button tends to be a better approach.

Modelling Un-homed systems in code

When doing homing, you typically have 4 system states, each with their own behavior. Referring to it as a State Machine is generally simpler.

Unhomed

The UnHomed state should be the default bootup state. This state should prepare your system for homing, and should provide:

- A boolean flag or state variable your system can utilize

- Safe operational current limits; Typically this means a low output current or speed control.

It's often a good plan to have some way to manually trigger a system to go into the Unhomed state and begin homing again. This allows your robot drivers to recover from unexpected conditions when they come up. There's a number of ways your robot can lose position during operation, most of which have nothing to do with software.

Homing

The Homing state should simply run the desired homing strategy.

Modeling this sequence tends to be the tricky part, and a careless approach will typically reveal a few issues

- Modelling the system with driving logic in the subsystem and Periodic routine typically clashes with the general flow of the Command structure.

- Modelling the Homing as a command can result in drivers cancelling the command, leaving the system in an unknown state

- And, trying to continuously re-apply homing and cancellation processes can make the drivers frustrated as the system never gets to the known state.

- Trying to make many other commands check homing conditions can result in bugs by omission.

The obvious takeaway is that however you home, you want it to be fast and preferably completed before the drivers try to command the system. Working with your designers can streamline this process.

Use of the Command decorator withInterruptBehavior(...) allows an easy escape hatch. This flag allows an inversion of how Commands are scheduled; Instead of new commands cancelling running ones, this allows your homing command to forcibly block others from getting scheduled.

If your system is already operating on an internal state machine, homing can simply be a state within that state machine.

Homed

This state is easy: Your system can now assert the known position, set your Homed state, apply updated power/speed constraints, and resume normal operation.
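The Unhomed, Homing, and Homed states described above can be sketched as a small state machine in plain Java. The class is hypothetical, and the current limits are passed in as parameters since the right values vary by system.

```java
/** Hypothetical homing state machine with per-state current limits. */
final class HomingStateMachine {
    enum State { UNHOMED, HOMING, HOMED }

    private final double safeAmps;
    private final double fullAmps;
    private State state = State.UNHOMED; // default bootup state

    HomingStateMachine(double safeAmps, double fullAmps) {
        this.safeAmps = safeAmps;
        this.fullAmps = fullAmps;
    }

    State getState() {
        return state;
    }

    boolean isHomed() {
        return state == State.HOMED;
    }

    /** Begin (or restart) the homing routine. */
    void startHoming() {
        state = State.HOMING;
    }

    /** Call each loop; only a system that is actively homing can become homed. */
    void update(boolean hardStopDetected) {
        if (state == State.HOMING && hardStopDetected) {
            state = State.HOMED;
        }
    }

    /** Manual escape hatch for drivers when the position is no longer trusted. */
    void invalidate() {
        state = State.UNHOMED;
    }

    /** Full power is only allowed once the position is known. */
    double currentLimitAmps() {
        return state == State.HOMED ? fullAmps : safeAmps;
    }
}
```

Other commands can then check isHomed() before running, and the subsystem can apply currentLimitAmps() whenever the state changes.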

Example Implementations

Command Based

Conveniently, the whole homing process actually fits very neatly into the Commands model, making for a very simple implementation

- initialize() represents the unhomed state and reset

- execute() represents the homing state

- isFinished() checks the system state and indicates completion

- end(cancelled) can handle the homed procedure

This example implements a "current draw detection" strategy:

class ExampleSubsystem extends SubsystemBase{

SparkMax motor = ....;

private boolean homed=false;

ExampleSubsystem(){

motor.setMaxOutputCurrent(4); // Will vary by system

}

public Command goHome(){

return new FunctionalCommand(

()->{

homed=false;

motor.setMaxOutputCurrent(4);

},

()->{motor.set(-0.5);},

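//Note: FunctionalCommand takes the end handler before isFinished

(cancelled)->{ //end

if(cancelled==false){

homed = true;

motor.setMaxOutputCurrent(30);

}

},

()->{return motor.getOutputCurrent()>3;}, //isFinished

this //Require this subsystem

)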

//Optionally: prevent other commands from stopping this one

//This is a *very* powerful option, and one that

//Should only be used when you know it's what you want.

.withInterruptBehavior(kCancelIncoming)

// Failsafe in case something goes wrong,since otherwise you

// can't exit this command by button mashing

.withTimeout(5);

}

}

This command can then be inserted at the start of autonomous, ensuring that your bot is always homed during a match. It also can be easily mapped to a button, allowing for mid-match recovery. If needed, it can also be broken up into a slightly more complicated command sequence.

For situations where you won't be running an auto (typical testing and practice field scenarios), the use of Triggers can facilitate automatic checking and scheduling

class ExampleSubsystem extends SubsystemBase{

ExampleSubsystem(){

new Trigger(DriverStation::isEnabled)

.and(()->homed==false)

.whileTrue(goHome());

}

}

Alternatively, if you don't want to use the withInterruptBehavior(...) option, you can consider hijacking other command calls with Commands.either(...) or new ConditionalCommand(...)

class ExampleSubsystem extends SubsystemBase{

/* ... */

//Intercept commands directly to prevent unhomed operation

public Command goUp(){

return either(

stop(),

goHome(),

()->homed

);

}

/* ... */

While generally not preferable, a DefaultCommand and the either/ConditionalCommand notation can be used to initiate homing. This is typically not recommended due to defaultCommands having an implicit low priority, while homing is a very high priority task.

Gyro Sensing

tags:

- stub

Success Criteria

- Configure a NavX or gyro on the robot

- Find a way to zero the sensor when the robot is enabled in auto

- Create a command that tells you when the robot is pointed the same way as when it started

- Print the difference between the robot's starting angle and current angle

- TODO

- what's an mxp

- what port/interface to use, usb

- which axis are you reading

Absolute Encoders

Absolute encoders are sensors that measure a physical rotation directly. They differ from Relative Encoders in their measurement range, as well as in the specific data they provide.

Success Criteria

- Using a testbench, define a range of motion with measurable real-world angular units.

- Configure an absolute encoder to report units of that range

- Validate that the reported range of the encoder is accurate over the fully defined range of motion.

- Validate the

Differences to Relative encoders

If we recall how Relative Encoders work, they tell us nothing about the system until verified against a reference. Once we have a reference and initialize the sensor, then we can track the system, and compute the system state.

In contrast, absolute encoders are designed to capture the full system state all at once, at all times. When set up properly, the sensor itself is a reference.

Similarities to Relative Encoders

Both sensors track the same state change (rotation), and when leveraged properly, can provide complete system state information.

Mechanical Construction

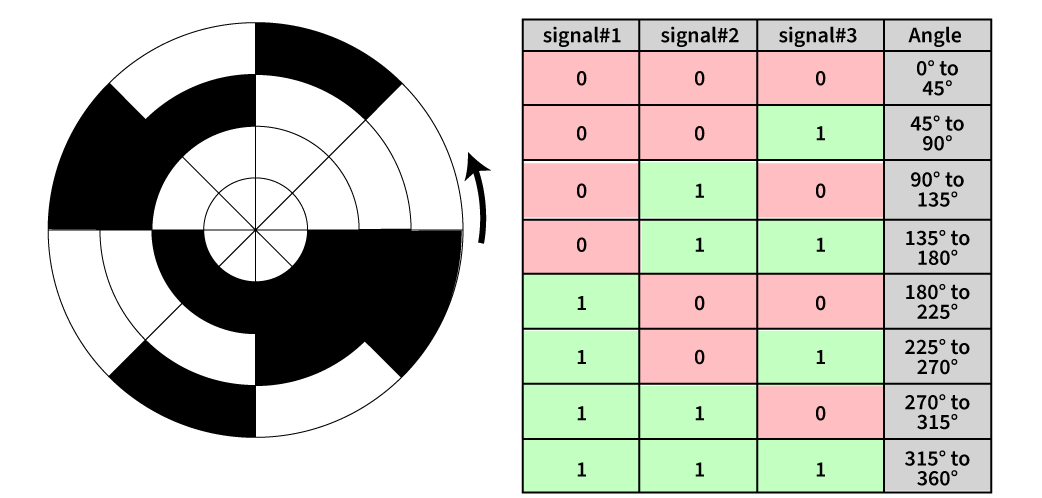

While the precise construction can vary, many absolute encoders tend to work in the same basic style: divide your measured distance into two regions. Then divide those two regions into two more regions each, and repeat as many times as needed to get the desired precision!

When you do this across a single rotation, you get a simple binary encoder shown here:

With 3 subdivisions, you can divide the circle into 8 regions of 45 degrees each.

Commonly, you'll see encoders with one of the following resolutions.

| Resolution (bits) | Divisions | Resolution (degrees) |
|---|---|---|
| 8 | 256 | 1.41 |
| 10 | 1024 | 0.35 |
| 11 | 2048 | 0.18 |
| 12 | 4096 | 0.09 |
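The numbers in the table follow directly from the repeated halving described above: each extra bit doubles the number of divisions. As a minimal sketch (the class and method names here are illustrative, not part of any FRC library), the arithmetic looks like this:

```java
/** Resolution math for an n-bit absolute encoder (illustrative names only). */
final class EncoderResolution {
    /** Number of distinct positions an encoder with the given bit count can report. */
    static int divisions(int bits) {
        return 1 << bits; // 2^bits
    }

    /** Smallest angle change the encoder can distinguish, in degrees. */
    static double degreesPerDivision(int bits) {
        return 360.0 / divisions(bits);
    }

    /** Index of the division containing the given angle (degrees, any value). */
    static int divisionIndex(double angleDegrees, int bits) {
        // Wrap the angle into [0, 360) before locating its division.
        double wrapped = ((angleDegrees % 360.0) + 360.0) % 360.0;
        return (int) (wrapped / degreesPerDivision(bits));
    }

    public static void main(String[] args) {
        for (int bits : new int[] {3, 8, 10, 11, 12}) {
            System.out.printf("%2d bits: %4d divisions, %.2f degrees each%n",
                bits, divisions(bits), degreesPerDivision(bits));
        }
    }
}
```

The divisionIndex helper shows what the sensor is conceptually doing: it can only say which slice of the circle the shaft is in, never where it is inside that slice.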

Reading Absolute Encoders

The typical encoder we use in FRC is the Rev Through Bore Encoder. This is a 10-bit encoder, and can be read by either

- Plugging it into the Spark Max

- Plugging it into the RoboRio's DIO port.

Connected as a RoboRio DIO device

When plugged into the RoboRio, you can interface with it using the DutyCycleEncoder class and associated features.

public class ExampleSubsystem extends SubsystemBase {

// Initializes a duty cycle encoder on DIO pin 0

// Configure it to return a value of 4 for a full rotation,

// with the encoder reporting 0 halfway through the rotation (2 out of 4)

DutyCycleEncoder encoder = new DutyCycleEncoder(0, 4.0, 2.0);

//... other subsystem code will be here ...

public void periodic(){

//Read the encoder and print out the value

System.out.println(encoder.get());

}

}

Real systems will likely use encoder ranges of 2*Math.PI (for Radians) or 360 (for degrees).

The "zero" value will depend on your exact system, but should be the encoder reading when your system is at a physical "zero" value. In most cases, you'd want to correlate "physical zero" with an arm horizontal, which simplifies visualizing the system, and calculations for FeedForwards for Arm subsystems later. However, use whatever makes sense for your subsystem, as defined by your Robot Design Analysis's coordinate system.
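To build intuition for how a full-range value and a zero value interact, here is a simplified model of the scaling. This is only a sketch under the assumption that the reading is scaled, shifted by the zero value, then wrapped back into the range; it is not the actual DutyCycleEncoder implementation, and the names are hypothetical.

```java
/** Simplified model of range + zero-offset scaling (hypothetical helper). */
final class EncoderScaling {
    /**
     * Converts a raw sensor fraction (0..1 of a rotation) into a reading in
     * [0, fullRange), shifted so the raw position at expectedZero reads as 0.
     */
    static double scaledReading(double rawFraction, double fullRange, double expectedZero) {
        double shifted = rawFraction * fullRange - expectedZero;
        // Wrap back into [0, fullRange) so the result never goes negative.
        return ((shifted % fullRange) + fullRange) % fullRange;
    }

    public static void main(String[] args) {
        // Same numbers as the example above: full range of 4, zero at 2.
        for (double raw : new double[] {0.0, 0.25, 0.5, 0.75}) {
            System.out.println(raw + " -> " + scaledReading(raw, 4.0, 2.0));
        }
    }
}
```

With a range of 360 and a zero of 90, a raw half rotation would read as 90 degrees in this model, which is the kind of sanity check worth doing by hand on a real mechanism.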

Connected as a Spark Max device

When a Through Bore Encoder is connected to a Spark, it'll look very similar to connecting a Relative Encoder in terms of setting up the Spark and applying/getting config, with a few new options:

class ExampleSubsystem extends SubsystemBase {

SparkMax motor = new SparkMax(10, MotorType.kBrushless);

// ... other stuff

public ExampleSubsystem(){

SparkBaseConfig config = new SparkMaxConfig();

//Configure the reported units for one full rotation.

// The default factor is 1, measuring fractions of a rotation.

// Generally, this will be 360 for degrees, or 2*Math.PI for radians

var absConversionFactor=360;

config.absoluteEncoder

.positionConversionFactor(absConversionFactor);

//The velocity defaults to units/minute; units per second tends to

//be preferable for FRC time scales.

config.absoluteEncoder

.velocityConversionFactor(absConversionFactor / 60.0);

//Configure the "sensor phase"; If a positive motor output

//causes a decrease in sensor output, then we want to set the

// sensor as "inverted", and would change this to true.

config.absoluteEncoder

.inverted(false);

motor.configure(

config,

ResetMode.kResetSafeParameters,

PersistMode.kPersistParameters

);

}

// ... other stuff

public void periodic(){

//And, query the encoder for position.

var angle = motor.getAbsoluteEncoder().getPosition();

var velocity = motor.getAbsoluteEncoder().getVelocity();

// ... now use the values for something.

}

}

Discontinuities

Remember that the intent of an absolute encoder is to capture your system state directly. But what happens when your system can exceed the encoder's ability to track it?

If you answered "depends on the way you track things", you're correct. By their nature, absolute encoders have a "discontinuity": some angle at which they jump from one end of their valid range to the other. Instead of [3,2,1,0,-1,-2] you get [3,2,1,0,359,358]! You can easily imagine how this messes with anything relying on those numbers.

For a Through Bore + Spark configuration, the default is to measure "one rotation", and the discontinuity matches the range of 0..1 rotations, or 0..360 degrees with a typical conversion factor. This convention means that it will not return negative values when read through motor.getAbsoluteEncoder().getPosition()!

Unfortunately, this convention often puts the discontinuity directly in the range of motion, meaning we have to deal with it frequently. PID controllers especially do not like discontinuities in their normal range.
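To see why a controller struggles here, compare the error a naive subtraction produces with the physical distance between two angles on either side of the jump. This is a minimal sketch with hypothetical names; the wrapped version assumes the mechanism can actually rotate through the discontinuity, which is not true for every arm.

```java
/** Shows how a 0/360 discontinuity distorts a simple error calculation. */
final class DiscontinuityDemo {
    /** Naive error: treats the sensor value as if it were continuous. */
    static double naiveError(double setpointDeg, double measuredDeg) {
        return setpointDeg - measuredDeg;
    }

    /** Shortest signed distance from measured to setpoint, in [-180, 180). */
    static double wrappedError(double setpointDeg, double measuredDeg) {
        double diff = setpointDeg - measuredDeg + 180.0;
        double wrapped = ((diff % 360.0) + 360.0) % 360.0; // into [0, 360)
        return wrapped - 180.0;
    }

    public static void main(String[] args) {
        // Physically, 359 degrees and 1 degree are only 2 degrees apart.
        System.out.println("naive:   " + naiveError(1.0, 359.0));
        System.out.println("wrapped: " + wrappedError(1.0, 359.0));
    }
}
```

A proportional term multiplies that error directly, so a naive controller would command a large output in the wrong direction for what is really a tiny correction.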

Ideally, we can move the discontinuity somewhere we don't cross it due to physical hardware constraints.

There are a few approaches you can use to resolve this, depending on exactly how your system should work, and what it's built to do!

Zero Centering

This is the easiest and probably ideal solution for many systems. The Spark has a method that changes the system from reporting [0..1) rotations to (-0.5..0.5] rotations. Or, with a typical conversion factor applied, (-180..180] degrees.

class ExampleSubsystem extends SubsystemBase {

// ... other stuff

public ExampleSubsystem(){

SparkBaseConfig config = new SparkMaxConfig();

config.absoluteEncoder.zeroCentered(true);

// .. other stuff

}

}

Most FRC systems won't travel more than 180 degrees in either direction from their zero, making this a very quick and easy fix.

Rev documentation makes it unclear if zeroCentered(true) works as expected with the onboard Spark PID controller.

If you test this, report back so we can replace this warning with correct information.

Handle the Discontinuity in your Closed Loop

Since this is common, some PID or Closed Loop controllers can simply take the discontinuity directly in their configuration. This bypasses the need to fix it on the sensor side.

For Sparks, the configuration option is as follows:

sparkConfig.closedLoop.positionWrappingEnabled(true).positionWrappingInputRange(min,max);

Be mindful of how setpoints are wrapped when passed to the controller! Just because the sensor is wrapped, doesn't mean it also handles setpoint values too.

If the PID is given an unreachable setpoint due to sensor wrapping, it can generate uncontrolled motion. Make sure you check and use wrapper functions for setpoints as needed.
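A setpoint wrapper can be as small as a single modulus helper. The sketch below uses hypothetical names and plain Java so it stands alone; WPILib's MathUtil.inputModulus(...) serves a similar purpose if you would rather not write your own.

```java
/** Wraps setpoints into the same range the sensor reports (hypothetical helper). */
final class SetpointWrapping {
    /** Wraps value into [min, max), e.g. 370 becomes 10 for a 0..360 range. */
    static double wrap(double value, double min, double max) {
        double range = max - min;
        // Shift so the range starts at 0, wrap, then shift back.
        return ((((value - min) % range) + range) % range) + min;
    }

    public static void main(String[] args) {
        System.out.println(wrap(370.0, 0.0, 360.0));    // sensor range 0..360
        System.out.println(wrap(190.0, -180.0, 180.0)); // zero-centered range
    }
}
```

Passing every requested angle through a helper like this before handing it to the controller keeps the setpoint and the sensor reading in the same coordinate range.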

Handle the discontinuity in a function

In some cases, you can just avoid directly calling motor.getAbsoluteEncoder().getPosition(), and instead go through a function to handle the discontinuity. This usually looks like this:

// In a subsystem using an absolute encoder

private double getAngleAbsolute(){

double absoluteAngle = motor.getAbsoluteEncoder().getPosition();

// Move the discontinuity from 0 to -90

if(absoluteAngle>270){

absoluteAngle-=360;

}

return absoluteAngle;

}

This example gives us a range of -90 to 270, representing a system that could rotate anywhere but straight downward.

This pattern works well for code aspects that live on the Roborio, but note this doesn't handle things like the onboard Spark PID controllers! Those still live with the discontinuity, and would cause problems.

Transfer the reading to a relative encoder

Instead of using the Absolute encoder as its own source of angles, we simply refer to the Relative Encoder. In this case, both encoders should be configured to provide the same measured unit (radians/degrees/rotations of the system), and then you can simply read the value of the absolute, and set the state of the relative.

More information for this technique is provided at Homing Sequences, alongside other considerations for transferring data between sensors like this.

Build Teams, Code, and Encoders

Since an absolute encoder represents a physical system state, an important consideration is preserving the physical link between system state and the sensor.

On the Rev Through Bore, the link between system state and encoder state is maintained by the physical housing, and the white plastic ring that connects to a hex shaft.

You can see that the white hex ring has a small notch to help track physical alignment, as does the black housing. The notch's placement itself is unimportant; however, keeping the notch consistently aligned is very important!

If we take a calibrated, working system, but then re-assemble it incorrectly, we completely skew our system's concept of what the physical system looks like. Let's take a look at an example arm.

We can see in this case we have a one-notch error, which is 60 degrees. This means that the system thinks the arm is pointing up, but the arm is actually still rather low. This is generally referred to as a "clocking" error.

When we feed an error like this into motor control tools like a PID, the discrepancy means the system will be driving the arm well outside your expected ranges! This can result in significant damage if it catches you by surprise.

As a result, it's worth taking the time and effort to annotate the expected alignment for the white ring and the other parts of the system. This allows you to quickly validate the system in case of rework or disassembly.

Ideally, build teams should be aware of the notch alignment and its impact! While you can easily adjust offsets in code, such offsets have to ripple through all active code branches and multiple users, which can generate a fair amount of disruption. However, in some cases the code disruption is still easier to resolve than further disassembling and re-assembling parts of the robot. It's something that's bound to happen at some point in the season.

Further Reading

Grey Code